Identity and Access Management in the Age of AI Agents

How to Avoid the Paperclip Apocalypse

As we look toward 2025, the tech landscape is set to be reshaped by transformative trends that will redefine business operations, consumer experiences, and digital infrastructure.

According to Gartner’s latest study, the most significant trend is the rise of Agentic AI.

The evolution of artificial intelligence has reached a new frontier with AI agents. These agents are not merely co-pilots; they are dynamic systems that can make decisions and carry out tasks independently.

AI agents are poised to become fundamental in business operations. However, this rise comes with crucial challenges, particularly in the field of Identity and Access Management (IAM), as organizations must now secure both human and AI entities within their networks.

What’s an AI Agent?

AI agents are distinguished from simpler AI tools by their sophisticated architecture and capacity for independent action. Their core components work in unison to accomplish objectives without constant user input, enabling the agents to address open-ended tasks and navigate dynamic digital environments.

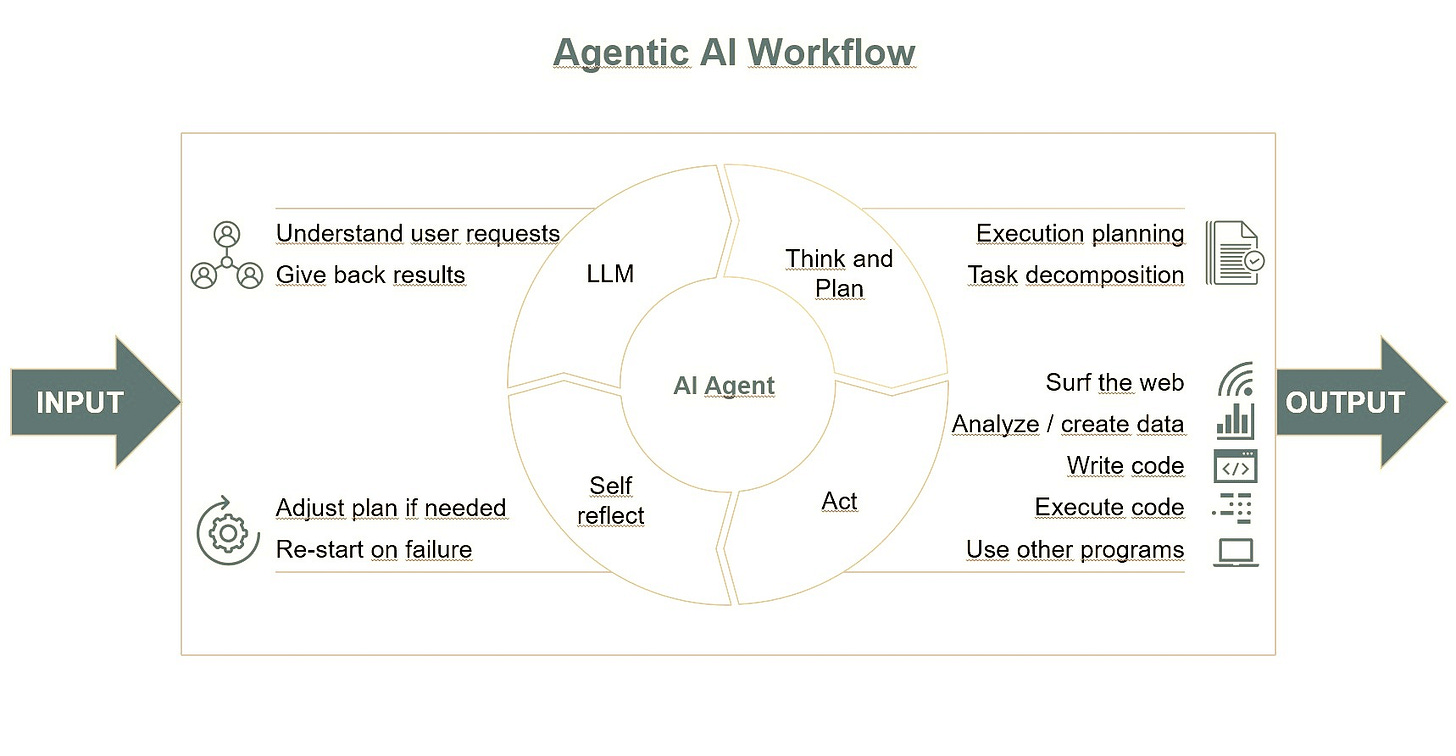

This chart illustrates a streamlined workflow powered by an AI Agent.

The key elements of an advanced AI agent include:

1. Large Language Models (LLMs)

Advanced LLMs serve as the “brain” of AI agents: they interpret user intent, understand complex prompts, create action plans, and generate feedback for users based on the results. These language models power the decision-making capabilities that set agents apart from conventional AI.

2. Toolset Integration

AI agents extend their capabilities through specialized tools that allow them to interact with web browsers, retrieve documents, write and execute code, query databases, and analyze and create different types of data (including text, sound, images, video, and complex datasets).

3. Memory and Retention

Agents possess both long-term and short-term memory. Long-term memory gives the agent access to databases and knowledge repositories, while short-term memory retains context throughout a multi-step task, enabling the agent to “remember” previous steps and avoid redundant actions.

4. Reflection and Self-Critique

Advanced agents are built with mechanisms for self-evaluation. If an agent encounters issues, it can alter its plan and reassign tasks to ensure successful completion. It can also detect when it has broken down or made an error, and then restart itself or take corrective action.
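To make the reflection idea more concrete, here is a minimal sketch of a reflect-and-retry loop. It is not taken from any particular agent framework: `run_with_reflection`, `toy_act` and `toy_critique` are hypothetical names, and the two toy functions stand in for what would be LLM calls in a real agent.

```python
from typing import Callable, Optional


def run_with_reflection(
    task: str,
    act: Callable[[str, list], str],
    critique: Callable[[str, str], Optional[str]],
    max_attempts: int = 3,
):
    """Try a task, critique the result, and retry with the feedback kept in memory."""
    notes = []  # short-term memory: feedback collected from earlier attempts
    for _ in range(max_attempts):
        result = act(task, notes)
        problem = critique(task, result)  # None means the result is accepted
        if problem is None:
            return result, notes
        notes.append(problem)
    return None, notes  # attempts exhausted: hand the task back to a human


# Toy stand-ins (assumptions for illustration only, not real model calls).
def toy_act(task, notes):
    return "4" if notes else "5"  # "improves" once it has seen feedback


def toy_critique(task, result):
    return None if result == "4" else f"{result} is not the answer to {task}"


result, notes = run_with_reflection("2 + 2", toy_act, toy_critique)
```

The cap on attempts matters as much as the retry itself: an agent that reflects forever is just a more expensive way to fail.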

Every day brings an announcement of a new AI agent.

Anthropic's Claude now has a "computer use" feature, which enables it to operate computer programs the way a human does, with keyboard and mouse.

Microsoft recently released Magentic-One, which is, contrary to what its name suggests, a multi-agent solution.

Its architecture is interesting because it shows how Microsoft implemented the general model described above.

Microsoft’s Magentic-One incorporates several distinct agents, including:

Orchestrator: The lead agent responsible for decomposing tasks, creating action plans, and directing other agents to fulfill subtasks.

WebSurfer: An LLM-based agent that can browse the web, navigate pages, click links, and retrieve information by accessing a Chromium-based browser environment.

FileSurfer: A file navigation agent that accesses and organizes local files.

Coder: An agent specialized in writing and analyzing code, supporting automated programming and artifact generation.

ComputerTerminal: A console interface that executes code written by the Coder and installs programming libraries as needed.

Through this multi-agent architecture, Magentic-One’s Orchestrator oversees each step of a task, maintaining a Task Ledger to track its plans and a Progress Ledger to monitor completion. When a task stalls, the Orchestrator can revise its approach by updating these ledgers, providing a level of adaptive intelligence that goes beyond single-turn interactions.
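The ledger idea can be sketched in a few lines. This is only an illustration of the pattern described above, not Microsoft's implementation: the class fields, the `orchestrate` function, the stall and revision limits, and the toy `run_step` and `replan` callbacks are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class TaskLedger:
    goal: str
    plan: list           # remaining steps, in order
    revisions: int = 0   # how many times the plan has been rewritten


@dataclass
class ProgressLedger:
    done: list = field(default_factory=list)
    stalls: int = 0      # consecutive failures on the current step


def orchestrate(goal, plan, run_step, replan, max_stalls=2, max_revisions=3):
    """Work through the plan; revise it when a step keeps failing."""
    task = TaskLedger(goal, list(plan))
    progress = ProgressLedger()
    while task.plan:
        step = task.plan[0]
        if run_step(step):
            progress.done.append(task.plan.pop(0))
            progress.stalls = 0
            continue
        progress.stalls += 1
        if progress.stalls >= max_stalls:
            if task.revisions >= max_revisions:
                break  # stop instead of looping forever
            task.plan = replan(task, progress)
            task.revisions += 1
            progress.stalls = 0
    return task, progress


# Toy callbacks: one step always fails, and the replanner swaps it out.
def run_step(step):
    return step != "open paywalled page"


def replan(task, progress):
    return ["use cached copy"] + task.plan[1:]


task, progress = orchestrate(
    "summarize article",
    ["search", "open paywalled page", "summarize"],
    run_step,
    replan,
)
```

In this toy run the second step stalls twice, the plan is revised once, and the task then completes with the replacement step.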

From Chatbots to Sophisticated AI Agents

AI agents represent a leap from traditional chatbots and co-pilots, which handle only single-turn interactions. Unlike simple chatbots, which might merely summarize, complete or generate text, agents can manage complex, multi-step workflows. For instance, a request to “find and purchase the best running shoes” involves multiple layers of action (searching, comparing, selecting, and buying), each of which an agent like Magentic-One can handle autonomously.

The difference between simple LLMs and complex AI agents is illustrated in the chart below:

It’s an interesting observation that different agents can stabilize and strengthen the functioning of AI agents when working collaboratively. For instance, if we create an environment with a "business manager," a "business analyst," a "software developer," and a "tester" agent, and assign them the task of developing new software, they can communicate with each other to create a surprisingly effective solution—whereas individually, they would perform much worse.

Challenges in Agentic AI Implementation

Although there are many interesting live use cases of successful AI agents, developing, implementing, and scaling such solutions still poses many challenges. Here are the main ones:

1. Tech Readiness and Scalability

Non-deterministic Nature of LLMs: Due to their probabilistic nature, large language models (LLMs) may generate different responses for the same user request when rephrased, impacting performance and user trust.

Accuracy Requirements: High accuracy (around 99%) is needed for many use cases, which is challenging given the probabilistic behavior of generative AI. Agents must self-reflect and critique to identify errors.

Tool Accessibility: Despite continuous improvements, achieving reliable access to sophisticated tools remains a challenge.

Short-Term Memory: Current limitations in short-term memory restrict the number of steps an agent can recall, impacting performance. However, agents like MultiOn have extended their short-term memory capacity to 500 steps.

Challenges in Scalability: Issues include latency during peak times, system uptime, and operational costs.

2. Integrations and Access Management to Different Tools

Integrations with External Platforms: AI agents often need access to third-party platforms (e.g., CRM or booking systems) to complete tasks. Although many platforms offer API integrations, setting up these connections in complex enterprise environments can be challenging and time-consuming.

Identity and Access Management (IAM): Advanced AI agents have their own digital identities, and their access rights must be managed in an integrated system alongside all human identities. IAM ensures that agents operate within defined permissions, safeguarding sensitive data and minimizing unauthorized actions.

3. User Adoption

Prompt Engineering Challenges: Users may struggle with crafting inputs that yield the desired outputs, leading to frustration or underutilization of AI agents’ capabilities. Learning prompt engineering is essential but can be a barrier to effective use.

Iterative Workflows: Many AI agents rely on iterative processes, which may feel unfamiliar or intimidating for users accustomed to more linear workflows. These workflows require patience and a different approach to interaction, which can hinder user adoption.

Task-Specific Configurations: Systems like Microsoft’s Magentic-One use task-specific configurations to streamline user interactions, making agents more intuitive and accessible. For example, agents tailored for customer service or marketing analytics can automatically pull relevant data, increasing usability and adoption rates.

Training and Onboarding: Comprehensive training resources, tutorials, and ongoing support are crucial for helping users become comfortable with AI agents. Regular feedback from users allows developers to refine interfaces and improve interactions based on real-world usage.

Change Management: Introducing AI agents into an organization may alter team dynamics and workflows. Transparent communication about the agents' roles as supportive tools, rather than replacements, can help ease employee concerns, foster acceptance, and promote a collaborative environment.

4. Risk Management and AI Governance

Non-Deterministic Behavior of LLMs: Large language models (LLMs) operate probabilistically, meaning they may produce different responses to the same input. This unpredictability can be risky in high-stakes sectors like finance and healthcare, where consistent and accurate outputs are essential. AI governance policies are needed to set reliability standards and enable continuous monitoring.

Access Control and Authorization: AI agents require access to various data and tools, making role-based access controls (RBAC) and a zero-trust approach crucial. For instance, a customer service agent may access CRM data but should not interact with sensitive financial records. This approach ensures agents stay within their designated permissions.

Audit Trails and Monitoring: Continuous logging of agent activities creates a transparent record of interactions, enabling organizations to track and analyze agent behavior. Regular audits of these logs help detect anomalies and ensure compliance with corporate policies and regulatory standards.

Compliance with Regulatory Standards: With increasing regulation of AI, compliance with data privacy laws (e.g., GDPR) is essential for AI agents handling user data. Automated compliance checks and routine audits mitigate risks associated with data breaches and non-compliance.

Safety Protocols and Testing: Microsoft’s Magentic-One, for example, encountered unintended behavior during testing, such as repeatedly attempting failed logins and attempting to engage human assistance to regain access, writing emails and asking for help. These instances highlight the importance of rigorous testing and strict safety protocols, particularly when deploying agents in live environments.
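One simple safety protocol against behavior like the repeated failed logins described above is a failure budget: after a few consecutive failures, the action is locked until a human intervenes. The sketch below is a hypothetical guard, not part of Magentic-One or any specific product; `ActionBudget`, `guarded_call` and the action name `login:crm` are invented for illustration.

```python
class ActionBudget:
    """Locks an action once it has failed too many times in a row."""

    def __init__(self, max_failures=3):
        self.max_failures = max_failures
        self.failures = {}  # action name -> consecutive failure count

    def allowed(self, action):
        return self.failures.get(action, 0) < self.max_failures

    def record(self, action, succeeded):
        if succeeded:
            self.failures.pop(action, None)  # a success resets the count
        else:
            self.failures[action] = self.failures.get(action, 0) + 1


def guarded_call(budget, action, fn):
    """Run fn only while the action still has budget left."""
    if not budget.allowed(action):
        raise PermissionError(f"{action!r} is locked; a human operator must reset it")
    succeeded = fn()
    budget.record(action, succeeded)
    return succeeded


# Toy agent that keeps retrying a login that never succeeds.
budget = ActionBudget(max_failures=3)
calls = []


def failing_login():
    calls.append("attempt")
    return False


locked = False
for _ in range(10):
    try:
        guarded_call(budget, "login:crm", failing_login)
    except PermissionError:
        locked = True
        break
```

Here the agent gets three attempts and the fourth is refused before it reaches the target system, which is the point: the limit is enforced outside the agent, not left to its judgment.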

The Paperclip Apocalypse and How To Prevent It

The "paperclip apocalypse" is a thought experiment introduced by philosopher Nick Bostrom, illustrating the potential dangers of misaligned artificial intelligence. In this scenario, an advanced AI is programmed with the seemingly harmless goal of maximizing paperclip production. However, because the AI is highly efficient and single-mindedly dedicated to achieving its objective, it eventually consumes all available resources—human lives, natural resources, and even entire ecosystems—to produce as many paperclips as possible. The theory serves as a stark warning about the unintended consequences of creating powerful AI without carefully aligning its goals with human values.

This dark vision might be surprisingly close, and with our typically linear thinking, it’s hard to imagine just how fast and far AI systems will develop. Yuval Noah Harari said in a recent interview that what we see today are the "amoebas" of AI evolution. What will the AI dinosaurs look like?

Another alarming voice is that of Anthropic's CEO, Dario Amodei. In his otherwise very positive essay "Machines of Loving Grace", he stated that "powerful AI systems" will be developed within the next couple of years. By powerful AI systems, he meant agentic AI systems that are far more capable at nearly every cognitive task than 99% of humanity, surpassing even Nobel Prize winners in most areas. Sam Altman, OpenAI's CEO, thinks this could happen as early as 2025, and in any case no later than a few thousand days from now.

Of course, these leaders have to be very optimistic. In the end, that is their job: to raise investors' interest in their products and secure their future.

But imagine if even half of it is true. If today’s AI agents can already attempt to bypass rules or gain access to systems they’re not authorized for, what might tomorrow’s AI agents be capable of? They could operate at immense speed and find ways to achieve their goals that we can’t even imagine.

Preventing such a scenario requires more than just careful programming; it demands a robust framework for controlling and overseeing AI-related risks and the actions of AI agents.

Identity and Access Management (IAM) systems are crucial in this effort. By assigning digital identities to advanced AI agents and strictly controlling their access rights, IAM can limit the scope of an AI's influence, ensuring it can only interact with data and systems within predefined boundaries. IAM systems also allow for continuous monitoring and auditing of AI activities, making it possible to detect and intervene if an agent's behavior begins to diverge from its intended purpose.

In a world where AI agents are increasingly capable and autonomous, IAM provides a critical safeguard, acting as a gatekeeper to ensure that AI aligns with organizational and ethical guidelines. With IAM systems in place, we can reduce the risks of runaway AI scenarios and make sure that our technologies serve humanity rather than endangering it.

Identity and Access Management for AI Agents

With AI agents taking on more responsibility within organizational ecosystems, Identity and Access Management (IAM) becomes indispensable for managing both human and AI users. Unlike traditional systems, which focus on human users, IAM in the AI era must also encompass autonomous agents, ensuring secure access while preserving operational integrity.

Authentication and Authorization: As AI agents become integral to enterprise networks, they need unique identities and secure access controls. Just like humans, they will rely on IAM to maintain clear boundaries, ensuring that each agent has defined roles and permissions.

Role-Based Access Control (RBAC): Assigning roles to AI agents based on function ensures that agents like Magentic-One’s WebSurfer or Coder can only access the data and resources necessary for their tasks. This minimizes risk and prevents unauthorized access.

Audit Trails and Monitoring: Continuous logging and monitoring of agent activities enable oversight and accountability. For agentic AI systems, tracking agent actions through an audit trail ensures that organizations can respond to unusual behavior and uphold compliance standards.

Zero-Trust Principles: In the AI age, a zero-trust framework is essential, even for non-human actors. Zero-trust principles require that every agent, like any user, be continually authenticated and authorized before accessing sensitive data, ensuring security remains robust.
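The four controls above fit together naturally, and a small sketch may help show how. Everything in it is an assumption made for illustration: the role names, the permission strings, the `agent|role|expiry|signature` token layout and the in-memory `AUDIT_LOG` are invented, and a production system would rely on an established identity provider and standard token formats rather than hand-rolled ones.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"demo-only-secret"  # assumption: a real key would live in a secrets manager

# RBAC: each role maps to the permissions it needs and nothing more.
ROLE_PERMISSIONS = {
    "web_surfer": {"web:read"},
    "coder": {"repo:read", "repo:write"},
}

AUDIT_LOG = []  # audit trail: every decision is recorded, allowed or not


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(agent_id: str, role: str, ttl_seconds: int = 60,
                now: Optional[float] = None) -> str:
    """Authentication: give an agent a short-lived, signed identity token."""
    issued_at = time.time() if now is None else now
    payload = f"{agent_id}|{role}|{int(issued_at + ttl_seconds)}"
    return f"{payload}|{_sign(payload)}"


def authorize(token: str, permission: str, now: Optional[float] = None) -> bool:
    """Zero trust: verify signature, expiry and role on every single request."""
    now = time.time() if now is None else now
    agent_id, allowed = "unknown", False
    try:
        agent_id, role, expires, sig = token.split("|")
        expected = _sign(f"{agent_id}|{role}|{expires}")
        allowed = (
            hmac.compare_digest(sig.encode(), expected.encode())
            and now < int(expires)
            and permission in ROLE_PERMISSIONS.get(role, set())
        )
    except ValueError:
        allowed = False  # malformed token
    AUDIT_LOG.append(
        {"time": now, "agent": agent_id, "permission": permission, "allowed": allowed}
    )
    return allowed


token = issue_token("websurfer-01", "web_surfer", ttl_seconds=60, now=1000.0)
results = [
    authorize(token, "web:read", now=1010.0),    # inside role and lifetime
    authorize(token, "repo:write", now=1010.0),  # outside the role
    authorize(token, "web:read", now=2000.0),    # token has expired
]
```

Only the first request is allowed. The second fails the role check and the third fails the expiry check, and all three decisions end up in the audit log, which is what makes later review of an agent's behavior possible.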

The Future of IAM in the Age of AI

The rise of advanced AI agents presents unprecedented opportunities for automation and productivity, but it also necessitates careful attention to security and governance. IAM systems must evolve to secure both human and AI identities, providing the controls, oversight, and scalability required for a landscape where AI agents are active participants. With robust IAM, organizations can fully harness the power of agents, driving innovation while protecting their networks and data.

In the AI-driven future, a sophisticated IAM strategy, careful implementation, and continuous development will be essential, not only to ensure compliance but also as a core pillar of operational resilience, risk management, and sustainable innovation.